Modest Critique of the ARC Prize

The ARC Prize ↗ is an interesting initiative. The Abstraction and Reasoning Corpus is an AI benchmarking suite where humans have maintained a strong dominance over machines. This makes it somewhat unique, because the overall trend has been the opposite - AIs catching and surpassing human performance on more or less every task.

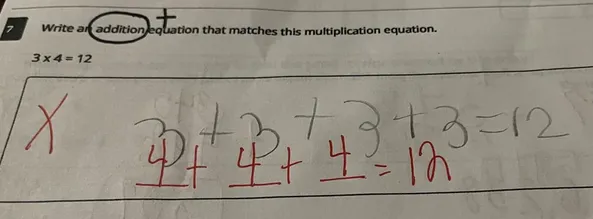

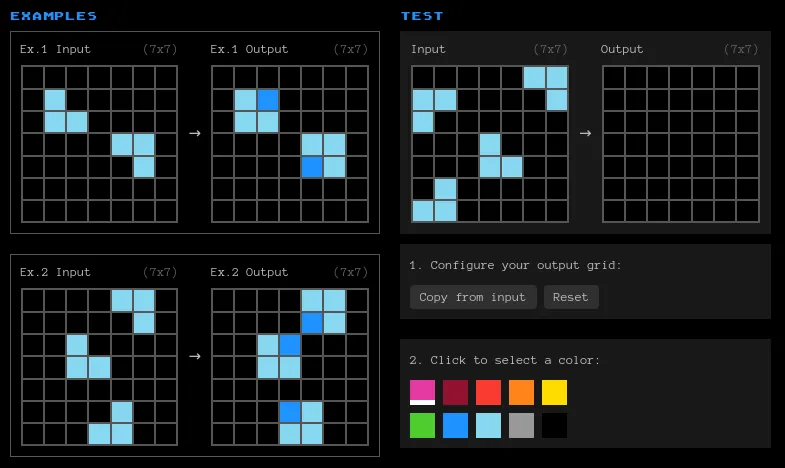

The corpus is made up of little visual transformation puzzles. Each puzzle provides a few example transformations (to learn the transformation logic from) and a test input, which the AI (or human) is supposed to transform into an output. In the below example, the solution is to "fill in" the missing corners of each 2x2 square with the darker blue.

The authors contend that solving the ARC corpus represents a step change improvement in the quest toward AGI. Maybe! Here I offer a couple of (modest, tentative) criticisms of the benchmark.

Says Who? (False Negatives)

Suppose someone offered you a million dollar prize to answer the following: What's the next number in the sequence ?

Ready? Here it is. The sequence above are values of the curve at . The correct answer, , is .

Did you win?

The answer given here is a bit of a stretch. But it exemplifies something: loosely specified questions can have many answers, whose legitimacy ultimately depends on the taste of the authority (whoever holds a red pen). Even many here is an understatement. If you happen to know something about polynomial interpolation ↗, you'll know that there are infinitely many defensible "solutions" to the above problem.

This subtle assumption, that the questioner's answer is unconditionally better than the responder's, is embedded in the evaluation process for the ARC prize. But in evaluating differently architected intelligences, shouldn't we expect, and in some capacity make allowance for, some radically different tastes? As is, these intelligences have no appeals process.

Is feels worthwhile to take a close look at a sample of rejected solutions from the current SOTA submissions. How likely are we to find reasonably defensible solutions that were rejected?

Cherry-Picking for Meatbags

Recall ARC's distinguishing feature among benchmarks is that humans still dominate machines. But this feels slightly less impressive when considering how finely curated the problems are.

There is a very broad space of (matrix)->(matrix) transformations, but the ARC corpus contains, more or less, the specific subset of problems fit for human consumption: problems characterized by visual perception, object permanence, and consistent agent behaviour (some puzzles have structures that act as controls - eg, a red X will turn the neighbor block red, a blue X will turn the neighbor block blue). These are more or less the things that evolution has fine-tuned the animal kingdom to be very good at; but it's not obvious to me that we should consider this a gold-standard as being "generalized". Isn't it just specific to meatbags? Can we over-fit the definition of intelligence?

It feels trivial to construct ARC problems that are machine-easy, human-hard. One such example: the output matrix is a 1x1 matrix whose value is the sum of the input matrix (modulo NUM_COLORS). If I were kidnapped by an alien AI, subjected to these machine-friendly puzzles, and declared unintelligent, it'd feel pretty unfair.

I'm sympathetic to the notion that an AGI ought to outperform humans at any given task, but I think the framing of the ARC prize overstates the degree to which humans are dominant here. If the problem set were evenly distributed across the entire space of possible problems, I expect the scoreboard would look different.